New protections for AI whistleblowers?

Last Week in AI Policy #19 - May 19, 2025

Delivered to your inbox every Monday, Last Week in AI Policy is a rundown of the previous week’s happenings in AI governance in America. News, articles, opinion pieces, and more, to bring you up to speed for the week ahead.

Senate Judiciary Committee Chair Chuck Grassley (R-IA) introduced the bipartisan AI Whistleblower Protection Act on Thursday.

The bill aims to protect employees who raise security and safety concerns, countering AI firms’ current use of strict non-disclosure agreements.

Grassley commented that protecting whistleblowers is essential to “ensure Congress keeps pace as the AI industry rapidly develops”.

Co-sponsor Rep. Jay Obernolte (R-CA) added that the measure is not simply a matter of workplace fairness but one of national security.

The House Energy and Commerce reconciliation bill, proposed on Sunday, contains a provision that would prevent states from enforcing “any law or regulation” relating to AI models or automated decision systems for 10 years.

GOP proponents of the bill have made the case that it strengthens the US in the face of Chinese competition and prevents “patchwork” regulation, allowing for a 10-year “learning period.”

Meanwhile, on Friday, a bipartisan group of 40 state attorneys general wrote a letter to Congress opposing this move.

States would not be forced to repeal existing legislation, but under the bill they would be barred from enforcing it.

President Trump Dismissed Copyright Chief After Report Questioning AI Fair-Use Practices

Register of Copyrights Shira Perlmutter was fired on Saturday, May 10.

Perlmutter was appointed in October 2020, during President Trump’s first term, by then-Librarian of Congress Carla Hayden, who was herself fired just a couple of days earlier.

The firing came after Perlmutter’s office released part three of a lengthy report on copyright and AI earlier in the week, which raised questions about AI companies’ use of copyrighted materials.

The report questioned whether the continued and increased use of copyrighted material by AI companies will necessarily lead to “real world improvements in utility”.

Rep. Joe Morelle (D-NY), ranking member of the Committee on House Administration, labeled the move “a brazen, unprecedented power grab with no legal basis.”

Morelle also pointed to the timing: the move came after Perlmutter refused to “rubber stamp Elon Musk’s efforts to mine troves of copyrighted works” for AI training purposes, a reference to the report in question.

In California, a bill introduced in February by Assembly Member Rebecca Bauer-Kahan cleared the Assembly floor on Monday.

The legislation mandates that GenAI developers document any copyrighted material used in training datasets.

It requires developers to let rights owners request information about the use of their material through the developer’s website, unless that information is already freely and easily accessible.

If developers do not comply with these requirements within 30 days, rights owners may bring a civil action against them.

The legislation does not apply to noncommercial academic or governmental uses or research.

BIS Issued Guidance Following Rescission of AI ‘Diffusion’ Rule

The Trump administration last week announced plans to abandon the Biden-era AI ‘diffusion’ rule that was set to come into effect May 15.

This week the Bureau of Industry and Security (BIS) published guidance that it says will help strengthen existing export controls on AI chips overseas.

The three-part guidance aims to:

- Alert industry to the risks of using PRC-manufactured chips, such as Huawei’s Ascend range. The guidance warns that using these chips violates General Prohibition 10 (GP10) and could lead to substantial criminal penalties.
- Warn the public about the potential consequences of allowing US AI chips to be used for training and inference of Chinese AI models. The guidance emphasizes the risk that such transactions could contribute to the development or enhancement of weapons of mass destruction (WMDs).
- Help industry identify transactional and behavioral ‘red flags’ that may indicate diversion of their chips to restricted countries or companies. It also outlines best practices for companies that detect such diversion tactics.

The Trump administration has yet to detail how it intends to regulate exports or how its approach will differ from the Biden administration’s plans.

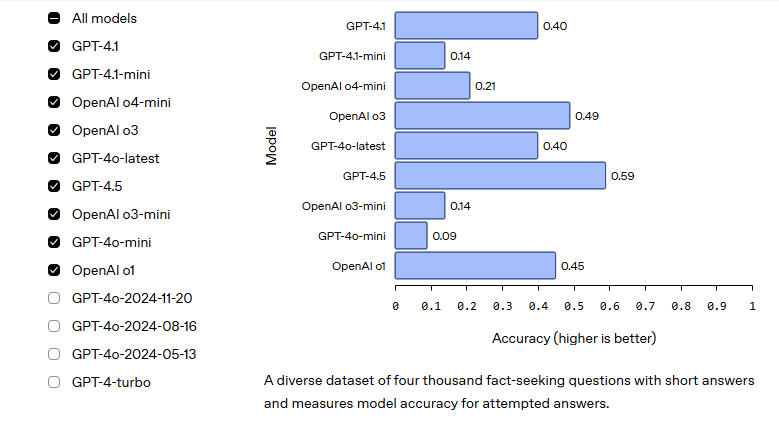

OpenAI promised to publish model safety reports more regularly, announcing a ‘Safety Evaluation Hub’ on Wednesday.

The hub lets users view internal safety evaluations for all of its existing models across a range of metrics, including harmful content, jailbreaks, hallucinations, and instruction hierarchy, as pictured below.

Interactive graph from OpenAI’s Safety Evaluation Hub showing accuracy for each of their existing models (May 14, 2025) (Source)

This move suggests that OpenAI is reading the room after recently drawing negative attention for its shortcomings on safety.

Press Clips

SemiAnalysis on the US trade deals with the UAE and KSA, and what they mean for AI. ✍

Garrison Lovely on OpenAI’s sleight-of-hand tactics and their letter to California Attorney General Rob Bonta. ✍

Henry Josephson in Epoch AI on the scalability of algorithmic advances and their implications for AI progress. ✍

Vikram Sreekanti and Joseph E. Gonzalez explore the inevitability of AI system failures in The AI Frontier. ✍

Lynette Bye on the importance of transparency.

Bruce Andrews sits down with ChinaTalk to discuss the future of US industrial policy. 🔉

Harry Booth writes about OpenAI’s o3 model’s successes… at cheating. ✍

Jack Clark’s ImportAI newsletter covering Amazon’s sorting robot, Huawei’s new MoE model, and how third-party compliance aids AI safety. ✍